Employee Experience (EX) Consulting

In the wake of the pandemic, organizations face unique challenges in adapting to new working norms while addressing evolving employee experience (EX) needs. We recognize that cultivating a positive and engaging EX has become a strategic imperative for driving organizational success and enhancing customer value.

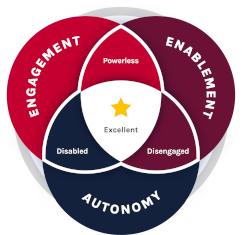

The Clearing’s Employee Experience Improvement model, adapted from Itam & Ghosh, 2020, focuses on three objectives:

- Engagement

- Enablement

- Autonomy

Our EX improvement services begin with a comprehensive assessment of the current employee experience, utilizing surveys, interviews, and focus groups to understand organizational culture, leadership practices, and engagement levels.

We then collaborate closely with clients to develop an EX strategy tailored to their goals, focused on fostering a positive workplace culture, promoting employee recognition and well-being, and implementing effective communication and feedback mechanisms. We frequently implement technology solutions that streamline HR processes and facilitate remote collaboration, empowering employees and enhancing productivity.

Strategic Communications

The Clearing’s strategic communications consultants are skilled at developing communication strategies that work within the culture of the organization and meet stakeholder needs. Additionally, our strong graphics capabilities allow us to create compelling, concise, and impactful presentations and thought leadership products to support strategic communications initiatives.

Navigating Physical Workplace Change

The Clearing’s workplace change consulting model helps organizations navigate the entire lifecycle of corporate change, beginning with defining the organizational vision, mission, and corporate strategy. We guide you from idea to implementation while minimizing distractions for the workforce and reducing the level of effort required of leadership.

Specifically, our workplace consultants help you shape your culture by aligning your company’s physical space, people, technology, and business processes with your cultural intent. This process helps your organization leverage technology and strategic investments to increase productivity and improve performance.

Balancing Risk, Safety, and Security

The Clearing’s management consultants deliver a unique, powerful, and highly successful approach to help organizations manage complex risk, safety, and security issues.

Our corporate culture consulting offerings in this space include:

- Security Executive Coaching & Executive Support

- Security Program Assessment & Implementation

- Security Program Communication Strategy Execution

- Risk & Security Culture Expert Witness Testimony

- Operational Change Impact Security Assessment & Mitigation

Improving Organizational Resilience

As leaders, how do we navigate uncertainty and risk while continuing to show compassion and illustrate strength and conviction across our organizations? The answer is organizational resilience.

Our organizational culture consultants will help you build resilience to keep your business healthy in the face of unavoidable shocks and stressors. We help individuals and organizations build better leadership models, management tools, and conflict resolution skills before a challenge occurs, better preparing your organization to deliver positive outcomes in difficult situations.

Corporate Culture Transformation

The Clearing exists to help leaders through difficult organizational culture challenges that require a transformation. Whether facing stagnation, a reputation crisis, customer dissatisfaction, or fragmentation, our consultants help leaders recognize that transforming organizational culture is part of the solution.

Our change management consulting approach begins by reviewing your organization from a broad internal and external perspective. This methodology involves large-group conversations in which multiple stakeholders discuss the daily role they play in shaping your organization’s culture.

Experiential Visual Design Services

The Clearing uses visual design to establish meaningful and impactful business outcomes for our clients through strategic storytelling.

This skill set allows our CX design consultants to distill complex, technical narratives into accessible visual products suitable for dissemination across traditional and digital communications channels.

Product Development Consulting

Our product development consultants support clients tasked with digital transformations, communications integration, and customer-centric technology improvements, helping organizations and government agencies respond better to changing customer needs and federal priorities by creating enterprise-wide agility.

Our product development services include:

- Designing & Implementing a Product Management Process

- Human-Centered Design

- Wireframing to Rapid Prototyping

- User Experience (UX) / User Interface (UI)

CX IT Transformation Consulting

IT transformation efforts often fail when leaders do not consider the full range of human factors involved in implementation, from buy-in and adoption challenges to training and usability issues.

The Clearing’s IT strategy consultants provide the necessary change management, communication, and program and project management support during digital transformations and IT migrations that address the human side of change to deliver an improved customer experience. We partner with our clients to offer user-based requirements gathering, IT usability testing, training, communication products, and technology adoption strategies for large-scale technology rollouts.

CX Data & Research Services

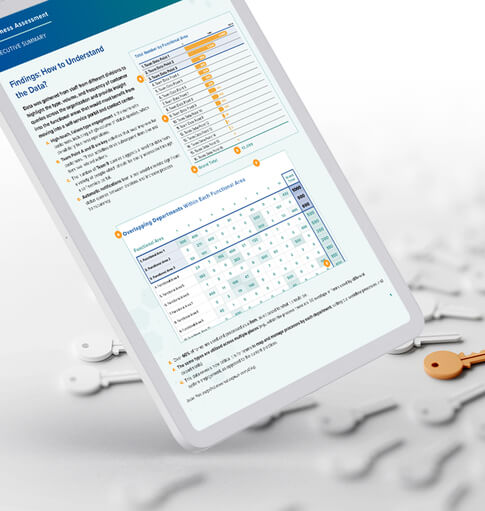

The Clearing’s CX data and research consultants use data analysis to understand complex operational systems and resolve observed challenges.

Our CX methodology analyzes available data to understand the current state of your system and then identify the key interventions that will help you achieve your outcomes.

Our customer research consultants can also help identify additional data sources that unlock further insights, and track key performance indicators to determine whether the designed interventions are working.

CX Strategy Consulting

Every brand interaction shapes your customer experience. How do you know you’re delivering the experience your customers want?

The Clearing helps clients create an intentional customer experience (CX) strategy by defining, planning, and codifying an organization-wide customer experience improvement approach that supports your organization’s goals.

CX Organization Design

Organizational design is both an art and a science. Your organization’s cultural, structural, social, and technical elements serve as the building blocks that determine whether your processes and behaviors will support your organization’s ability to deliver the customer experience required to accomplish its mission and realize its vision.

The Clearing’s CX consulting services ensure that your organizational design focuses all available resources on the most important aspects of business and customer needs. Concentrating on the fewest, most critical elements will reveal an organizational structure that creates the space for leaders and employees to better serve customers and provide results.

CX Transformation & Change

Transforming your customer experience requires leaders to view their business through a customer’s lens and then make the incremental changes required to improve.

Our customer experience consultants help organizations transform their values, structures, operations, technology, and culture to mature their CX capabilities by creating an environment that focuses on the customer and delivers a high-quality customer experience at scale.

Brand & Identity Consulting

Your organization’s brand is the collective emotional and cognitive perception your customers form from your customer experience strategy and their interactions with your people, processes, systems, and more. To succeed, your brand must evolve to meet your customers’ needs at every touchpoint along their journey.

We offer a variety of brand services including:

- Brand Strategy Development

- Logo & Visual Identity Creation

- Brand Training

- Marketing Support

Leadership Training

Our interactive, experiential leadership training workshops give participants the opportunity to reflect on their learning and apply it to current challenges. The workshops strengthen participants’ ability to manage complexity and competing priorities and guide teams toward success, help high performers move into leadership roles, support the continued development of seasoned executives, and more.

Our offerings include, but are not limited to:

- Accelerating Your Project Management Success

- Advanced Meeting Facilitation

- The Art and Science of Time Management

- Building an Intentional Culture

- Building Resilience

- Creating a Positive Customer Experience

- Designing and Leading Outcome-Driven Meetings

- Engaging in Compassionate Conflict

- Enhancing Customer Experience (CX)

- Giving and Receiving Effective Feedback

- Leading Through and/or Navigating Change

- Leading With Authenticity

Leadership Coaching Services

The Clearing offers a wide variety of customized leadership coaching services as a catalyst or complement to our business transformation services.

Our one-on-one leadership coaching supports managers and executives seeking personal and organizational success by aligning their behaviors and actions with their objectives and holding them accountable for progress toward those objectives.

Organization Design and Development

The Clearing combines data science, social science, and visual tools in a manner uniquely suited for the strategic transformation of federal systems through organizational design.

Designing and sustaining a strategic transformation requires a foundation in theory along with a set of skills that reinforce people, processes, and systems as they change. Sustaining and thriving in change demands that leaders focus on four areas:

- Strategic Communications

- Explicit Behavior Shifts

- Performance Indicators and Measures

- Multifaceted Risk Management

Program/Project Management

The Clearing’s approach to project and program management focuses on identifying and delivering the fewest, most important initiatives that will provide an organization with the best customer results and the highest growth.

Our certified project managers use industry best practices and our custom project management methodologies to create a program structure that provides clear governance, stakeholder enrollment, strategic communications, and risk management roles to ensure successful outcomes.

Our management consulting approach aligns and builds trust among leaders and stakeholders and creates clarity on which initiatives should be tackled first. Our project managers then work with clients to drive the initiatives to successful completion.

Organizational Strategy Facilitation Services

The Clearing’s strategy facilitation services help clients design and run successful in-person or virtual strategy sessions that ensure the group’s goals are met.

Our facilitation sessions allow participants to exchange information, engage in meaningful dialogue, and discuss actionable matters. In each session, The Clearing’s strategy consultants will work to balance the passion of the group, the agenda, and the leaders’ needs to guide the group to the intended outcome. We facilitate senior leadership discussions, business meetings, and off-sites, as well as negotiations in and across organizations.

Agile Change Management

The Clearing’s agile change management consultants help our clients “lead through change” by:

- Assessing the organization’s current environment

- Diagnosing underlying issues that keep the organization from realizing its vision

- Implementing short sprints to develop testable, timely solutions

- Iterating quickly based on feedback

Our agile change management methodology creates a shared perspective and shared intent among critical stakeholders, mitigates risks, enrolls stakeholders, and determines behaviors necessary to implement and sustain the change.

Organizational Strategy Implementation

The Clearing provides forward-looking management strategy consulting services to increase organizational effectiveness, growth, and innovation.

We combine our innovative strategic thinking with our clients’ existing organizational strategy knowledge and expertise to develop pragmatic and actionable solutions to strategic challenges.

Our corporate strategy consulting services blend strategy and design with the critically important voice of the customer and build alignment across the organization to deliver lasting, meaningful, and actionable change.